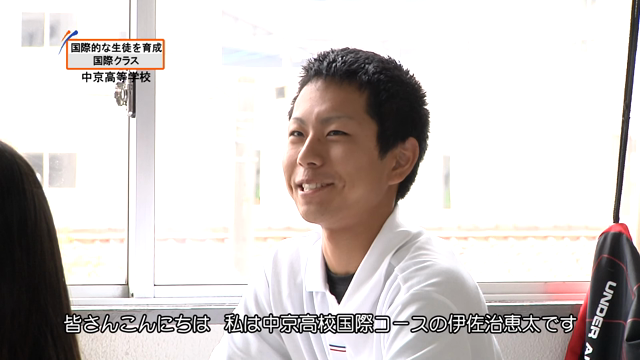

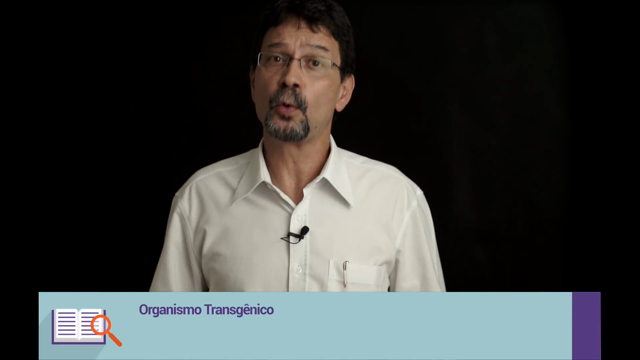

AVSpeech is a new, large-scale audio-visual dataset comprising speech video clips with no interfering background noise. The segments are 3 to 10 seconds long, and in each clip the only audible sound in the soundtrack belongs to a single speaking person who is visible in the video. In total, the dataset contains roughly 4,700 hours of video segments drawn from 290k YouTube videos, spanning a wide variety of people, languages, and face poses. For more details on how we created the dataset, see our paper.

To discuss the dataset, including how to access and use it, please see our Google Group: avspeech-users.